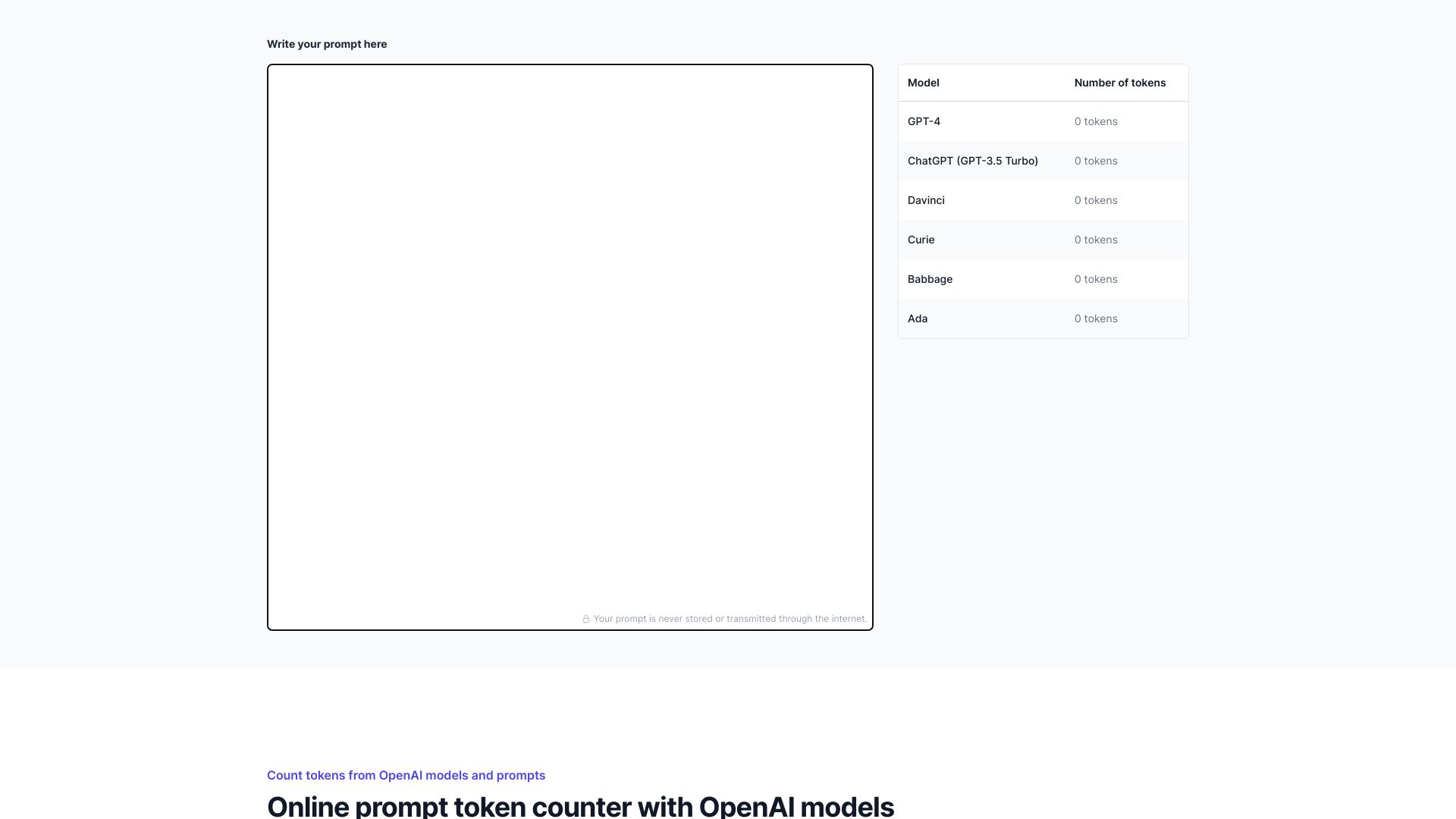

What is Prompt Token Counter for OpenAI Models?

An online utility for content creators and developers working with OpenAI models. OpenAI models read and bill text in tokens, and this tool counts the tokens in a prompt so you can keep it within a model's token limit and use tokens efficiently.

How to use Prompt Token Counter for OpenAI Models?

Simply paste your prompt into the input field, and the tool instantly displays the token count. You can then adjust the prompt to stay within the model's token limit, improve performance, and manage costs.
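The count, compare, and adjust workflow described above can be approximated in a few lines of Python. This is a minimal sketch and not the tool's implementation. It assumes the common rule of thumb that one token is roughly four characters of English text, so its counts are estimates only. Exact counts require the model's own tokenizer, such as OpenAI's open-source `tiktoken` library. The function names `estimate_tokens` and `check_prompt`, the token limit, and the per-1,000-token price are hypothetical placeholders. Substitute the real figures for the model you use.

```python
import math

# Assumption: roughly 4 characters of English text per token.
# This is a heuristic, not an exact tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(prompt: str) -> int:
    """Approximate a prompt's token count from its character length."""
    if not prompt:
        return 0
    return math.ceil(len(prompt) / CHARS_PER_TOKEN)


def check_prompt(prompt: str, token_limit: int, price_per_1k_tokens: float) -> dict:
    """Estimate tokens, compare them to a limit, and estimate input cost.

    token_limit and price_per_1k_tokens are hypothetical, caller-supplied
    values; look up the real ones for your model.
    """
    tokens = estimate_tokens(prompt)
    return {
        "tokens": tokens,
        "within_limit": tokens <= token_limit,
        "remaining": token_limit - tokens,
        "estimated_cost": tokens / 1000 * price_per_1k_tokens,
    }


if __name__ == "__main__":
    prompt = "Summarize the following article in three bullet points."
    # Hypothetical limit and price, for illustration only.
    print(check_prompt(prompt, token_limit=8192, price_per_1k_tokens=0.01))
```

A negative `remaining` value signals that the prompt must be shortened before it is sent to the model.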

What are the core features of Prompt Token Counter for OpenAI Models?

- Real-time token counting with instant results

- Accurate calculations for all OpenAI model types

- Visual indicators for token limits

- Optimization suggestions to improve efficiency

- Export functionality for token usage reports
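The token-limit indicators and usage reports listed above can be illustrated with a small report generator. This sketch is hypothetical: the tool's actual export format is not documented here, so the column names (`prompt_name`, `estimated_tokens`, `percent_of_limit`, `over_limit`) and the function `usage_report_csv` are invented for illustration. Token counts again use the rough four-characters-per-token heuristic rather than a real tokenizer, and the report is built with Python's standard `csv` module.

```python
import csv
import io
import math

# Assumption: roughly 4 characters per token (a heuristic, not a tokenizer).
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate a text's token count from its character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN) if text else 0


def usage_report_csv(prompts: dict[str, str], token_limit: int) -> str:
    """Build a CSV token-usage report (hypothetical column layout).

    percent_of_limit is the kind of value a visual limit indicator
    could display; over_limit flags prompts that exceed token_limit.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["prompt_name", "estimated_tokens", "percent_of_limit", "over_limit"])
    for name, text in prompts.items():
        tokens = estimate_tokens(text)
        percent = round(100 * tokens / token_limit, 1)
        writer.writerow([name, tokens, percent, tokens > token_limit])
    return buffer.getvalue()


if __name__ == "__main__":
    report = usage_report_csv({"greeting": "Hello there!", "long": "x" * 100}, token_limit=20)
    print(report)
```

Writing to an in-memory `io.StringIO` keeps the function easy to test; passing a real file object to `csv.writer` instead would save the report to disk.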